Personal Notes

Personal notes of things I have to remember for a pure theory exam, please ignore.

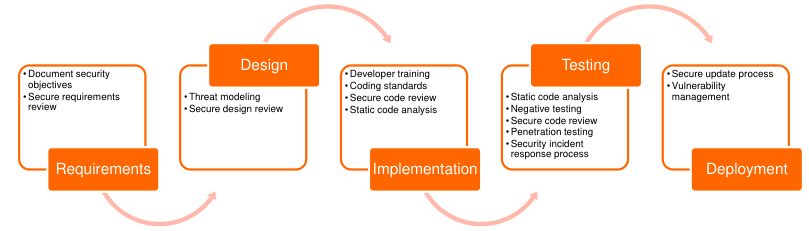

1. Unit 1: Secure Development Lifecycle

Learn each step with details

2. Unit 2: Threat Modelling and Risk Analysis

2.1. Definitions of Threat, Risk & Vulnerability:

- Threat: A potentially successful attack

- Risk: The potential for harm or damage, measured as the combination of likelihood × impact

- Vulnerability: A weakness identified in the product for which an exploit does exist
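To make the likelihood × impact definition concrete, here is a minimal sketch that ranks a few threats by risk. The 1 to 5 scales, the `Threat` class and the example threats are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Threat:
    name: str
    likelihood: int  # assumed scale: 1 (rare) .. 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) .. 5 (severe)

    @property
    def risk(self) -> int:
        # Risk as the combination of likelihood and impact.
        return self.likelihood * self.impact


# Hypothetical threats, invented for the example.
threats = [
    Threat("Default admin password", likelihood=4, impact=5),
    Threat("Physical theft of server", likelihood=1, impact=5),
    Threat("Verbose error messages", likelihood=3, impact=2),
]

# Highest risk first, so mitigation effort goes where it matters most.
ranked = sorted(threats, key=lambda t: t.risk, reverse=True)
for t in ranked:
    print(f"{t.risk:>2}  {t.name}")
```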

2.2. Threat Modelling

Threat Modeling answers:

- [Assets] What assets need to be protected

- [Threats] What are the threats to these assets

- [Risk] How important or likely is each threat?

- [Fix] How can we mitigate the threats?

Decompose -> Identify Threats -> Analyze Risks -> Mitigate Risks -> Validate

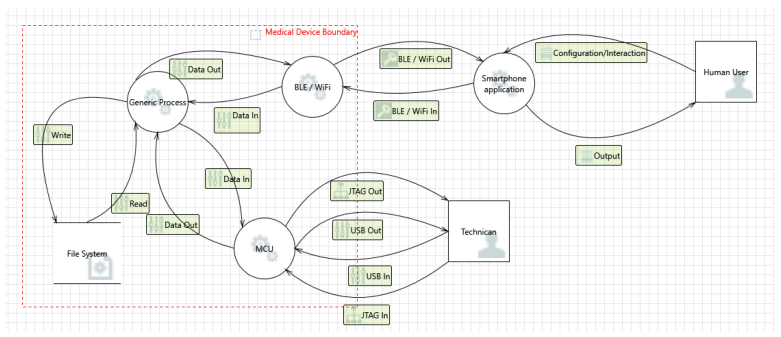

Decompose: What are we building? Identify assets and actors, and represent the system graphically with a Data Flow Diagram.

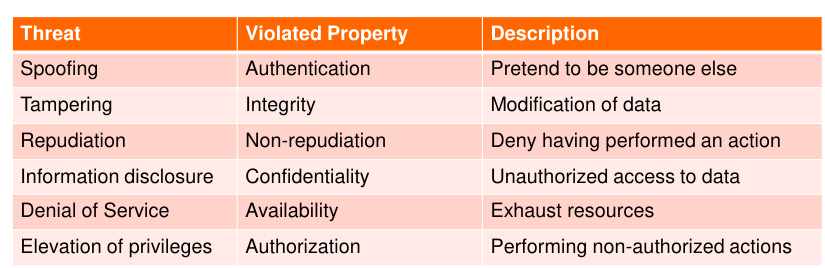

Identify Threats: Take the Data Flow Diagram and ask what can go wrong for each element. Use STRIDE or Attack Trees for guidance.

STRIDE:

- **S**poofing

- **T**ampering

- **R**epudiation

- **I**nformation Disclosure

- **D**enial of Service

- **E**levation of Privilege

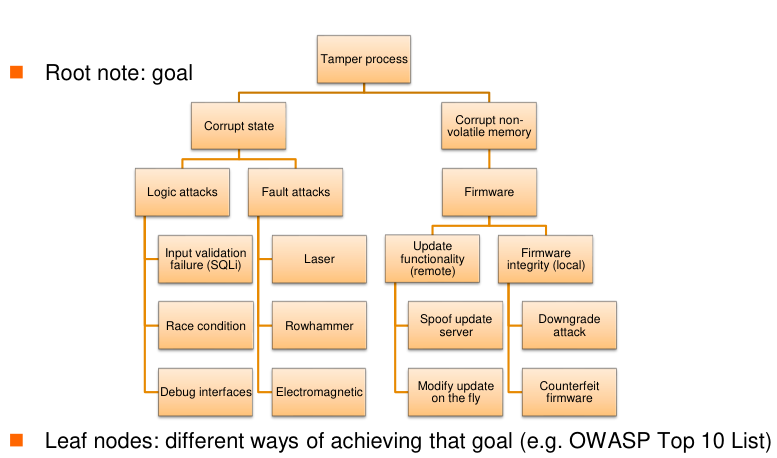

Attack Trees: Set the root node as the attacker's goal (e.g. obtaining root) and represent the attack as a tree, where the leaf nodes are the different ways of achieving that goal.
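A minimal sketch of an attack tree as a data structure, assuming a plain OR-tree where every leaf is an alternative way to reach the goal. The `Node` class, the `attack_paths` helper and the example attacks are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    label: str
    children: list["Node"] = field(default_factory=list)


def attack_paths(node, prefix=()):
    """Yield every root-to-leaf path; each leaf is one way to reach the goal."""
    path = prefix + (node.label,)
    if not node.children:
        yield path
        return
    for child in node.children:
        yield from attack_paths(child, path)


# Root node = the attacker's goal; leaves = concrete ways to achieve it.
tree = Node("Obtain root", [
    Node("Steal credentials", [
        Node("Phish an administrator"),
        Node("Brute-force SSH password"),
    ]),
    Node("Exploit a local privilege-escalation bug"),
])

paths = list(attack_paths(tree))
for p in paths:
    print(" -> ".join(p))
```

Enumerating the paths like this gives a checklist of attacks that each need a mitigation or a risk decision.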

Other popular threat models:

- OWASP IoT Vulnerabilities Projects

- LINDDUN: Privacy Threat Analysis Methodology

- Linkability: Being able to determine if two entities are linked

- Identifiability: Being able to identify a subject

- Non-Repudiation: Not being able to deny a claim

- Detectability: Being able to determine whether an item exists

- Disclosure of Information

- Unawareness: Being unaware of consequences of sharing information

- Non-compliance: Not being compliant with legislation

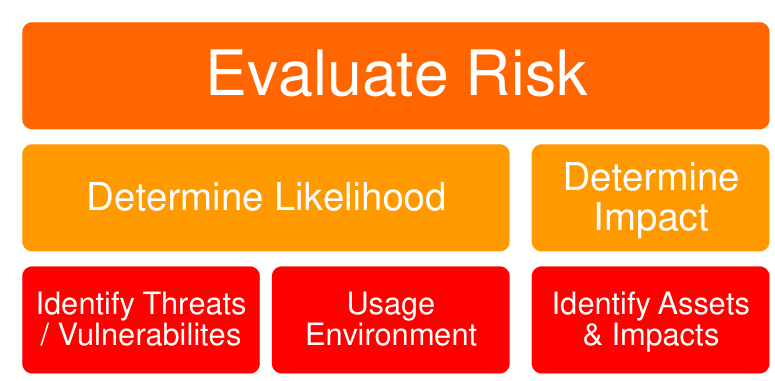

- Risk Analysis: Determine whether an identified threat can be neglected or what mitigation should be applied.

There are some general Scoring Systems:

- CWSS (Common Weakness Scoring System)

- CVSS (Common Vulnerability Scoring System)

- CWRAF (Common Weakness Risk Analysis Framework)

- Select CWEs that are related

- DREAD:

- Damage: How much damage results from the attack

- Reproducibility: How easily the attack can be reproduced

- Exploitability: Effort and expertise needed to mount the attack

- Affected users: How many users would be affected

- Discoverability: How easy it is to discover the vulnerability
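A minimal sketch of a DREAD rating, assuming each factor is scored from 1 to 10 and the overall score is the plain average. Both the scale and the averaging are common conventions rather than something fixed by these notes, and the example ratings are invented.

```python
DREAD_FACTORS = (
    "damage",
    "reproducibility",
    "exploitability",
    "affected",
    "discoverability",
)


def dread_score(ratings: dict[str, int]) -> float:
    """Average the five DREAD ratings (assumed scale: 1..10 each)."""
    missing = set(DREAD_FACTORS) - ratings.keys()
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    return sum(ratings[f] for f in DREAD_FACTORS) / len(DREAD_FACTORS)


# Hypothetical ratings for a SQL injection finding.
sql_injection = {
    "damage": 8,
    "reproducibility": 10,
    "exploitability": 7,
    "affected": 8,
    "discoverability": 7,
}

print(dread_score(sql_injection))
```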

3. Unit 3: Security Architecture Design and Mechanisms

The architecture is a formal description and representation of a system, its components and their relationship to the environment.

The security architecture is a unified security design that addresses the necessities and potential risks involved in a certain scenario or environment. It contains the concepts, principles, structures and standards used to design, implement, monitor and secure operating systems, equipment, etc.

The purpose of the security architecture is to understand and manage the risks.

Security starts at the policy level. The Security Policy is a tool that dictates how sensitive information and resources are to be managed and protected.

3.1. Trusted Computing Base

The trusted computing base is a collection of hardware, software and firmware components that provide some form of security and enforce the policy.

The security perimeter is a boundary that divides the trusted from the untrusted.

The security model specifies the operational and functional behavior of a system for security. There are many security models:

- Graham-Denning Model: Formal system of protection rules

- Information-Flow Model: Demonstrates data flows, communications channels…

- State-Machine Model: Abstract mathematical model

- Non-Interference Model: Subset of information-flow model that prevents subjects operating in one domain from affecting each other in violation of security policy.

- …

Access is the flow of information between a subject and an object.

Access capability is what a subject can do to an object

Access control governs the information flow.

- Discretionary Access Control (DAC): The information owner determines which access capabilities a subject has to which objects

- Mandatory Access Control (MAC): The access capabilities are predetermined by the security classification of the subject and the sensitivity of the object
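The difference between the two can be sketched as follows. This is a simplified illustration: the `DacObject` class, the three labels in `LEVELS` and the "clearance must be at least the sensitivity" read rule are assumptions, not a complete access control model.

```python
class DacObject:
    """DAC: the owner decides who gets which rights."""

    def __init__(self, owner):
        self.owner = owner
        self.acl = {owner: {"read", "write"}}

    def grant(self, granter, subject, rights):
        # Only the owner may hand out access capabilities.
        if granter != self.owner:
            raise PermissionError("only the owner may change access")
        self.acl.setdefault(subject, set()).update(rights)

    def allowed(self, subject, right):
        return right in self.acl.get(subject, set())


# MAC: the decision follows from labels; no user can override it.
LEVELS = {"public": 0, "confidential": 1, "secret": 2}  # assumed labels


def mac_can_read(subject_clearance, object_sensitivity):
    return LEVELS[subject_clearance] >= LEVELS[object_sensitivity]


doc = DacObject(owner="alice")
doc.grant("alice", "bob", {"read"})
print(doc.allowed("bob", "read"), doc.allowed("bob", "write"))
print(mac_can_read("secret", "confidential"), mac_can_read("public", "secret"))
```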

3.2. Security By Design

Make security an integral part of the design phase of product development.

- Design your product with the fact in mind that it will be attacked

- Integrate security controls from the beginning

- Make security tests a critical part of development process

Goals:

- Spell out threats to a system

- Provide system architecture mitigating as many threats as possible

- Employ design techniques, forcing developers to consider security with every line of code

- Enforce necessary authentication, authorization, confidentiality, data integrity, etc.

- Design a robust security architecture

- Take malicious practices for granted.

Design techniques: Minimize attack surface.

Principle of least privilege: SW processes and their authorized users shall be granted only those privileges required for them to carry out their specified function.

Principle of least functionality: Disable/Uninstall unused functionality, protocols, ports, services, etc. Limit the SW functionality to the strictly needed.

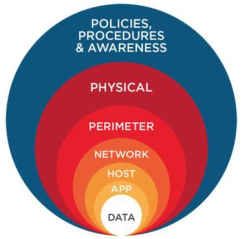

Defense in depth: Multiple layers of security controls in place. If one mechanism fails, another will be in place.

Ensure confidentiality: Encrypt sensitive data and use standardized algorithms.

Ensure integrity: Use secure boot, design a secure update process, verify integrity.

Ensure authenticity and non-repudiation: Use standardized protocols and algorithms.

Secure Communication: Never communicate over insecure channels, verify authenticity of data

3.3. Defensive programming

Defensive Programming is a form of defensive design intended to ensure the continuing function of a piece of software under unforeseen circumstances.

- Source code should be readable & understandable

- Make SW behave predictably even in case of unexpected inputs/actions

- Assume that SW will be attacked, use safe functions.

Key principles:

- DRY (Don’t Repeat Yourself)

- SOLID:

- S: Single Responsibility Principle

- O: Open for extension, closed for modification

- L: Liskov substitution principle

- I: Interface segregation principle

- D: Dependency Inversion Principle

- CQRS (Command Query Responsibility Segregation): Segregate operations reading data from operations updating data
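A minimal sketch of the CQRS idea, assuming a shared in-memory dictionary as the data store. The `AccountCommands` and `AccountQueries` classes are hypothetical names; the point is only that the write side and the read side are separate objects.

```python
class AccountCommands:
    """Write side: operations that update data and return nothing."""

    def __init__(self, store):
        self._store = store

    def deposit(self, account, amount):
        if amount <= 0:
            raise ValueError("amount must be positive")
        self._store[account] = self._store.get(account, 0) + amount


class AccountQueries:
    """Read side: operations that return data and never modify it."""

    def __init__(self, store):
        self._store = store

    def balance(self, account):
        return self._store.get(account, 0)


store = {}
commands = AccountCommands(store)
queries = AccountQueries(store)

commands.deposit("acc-1", 50)
print(queries.balance("acc-1"))
```

Keeping the two sides apart makes it easier to give read-only components no write capability at all, which fits the principle of least privilege.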

3.4. Security Mechanisms

- Cryptography

- Protocols: TLS, IPsec, Wireguard

- Privacy Techniques:

- Hardware Security: TPM, Hardware Security Modules, Secure Elements, Trusted Execution Zones, Root of Trust

- Authentication and Authorization

- Key Management

4. Unit 4: Security Testing, Evaluation and Certification

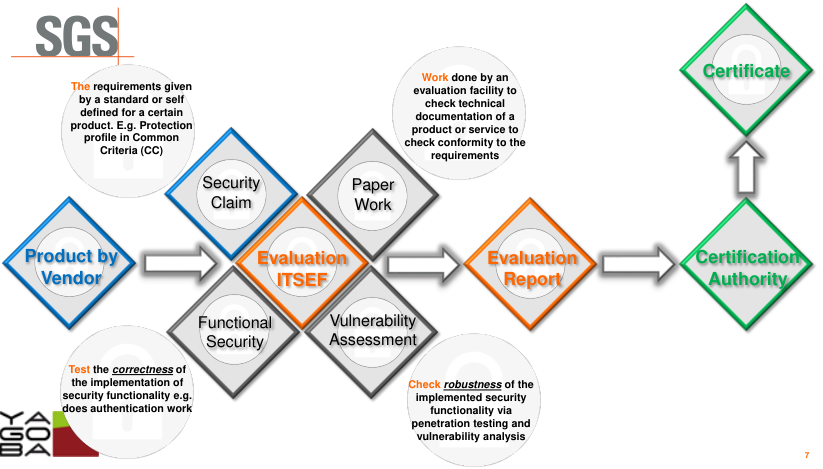

A product is evaluated by:

- Security claim: The requirements for a certain product, given by a standard or self-defined

- Paper Work: Check the technical documentation of a product for its conformity to the requirements

- Functional Security: Check the correctness of the implementation of the security functionality

- Vulnerability assessment: Check the robustness of the implemented security functionality (pentest)

In the Common Criteria:

- Security Claim: Given by the vendor. In other schemes partially given (by the specification/standard)

- Paper Work: Hundreds of pages

- Functional Security: Independent Functional Testing

- Vulnerability Assessment: Implementation review, Vulnerability Analysis (VA) plan, VA Testing (pentest), etc.

The security claim evaluation effort increases with the increasing functionality and increasing assurance (the depth of the evaluation).

4.1. Assurance Levels

High: Review of technical documentation, testing to demonstrate that the product has its security functionalities properly implemented, and an assessment of the resistance to skilled attackers (big pentest).

Substantial: Review of technical documentation and medium pentest

Basic: Review of technical documentation or minimal pentest.

4.2. Regulations

- EU Cybersecurity Act

- GDPR

- California Consumer Privacy Act

- Common Criteria

4.3. Security Testing

Functional Security: Demonstration that your security is correctly implemented. Done by functional testing.

Robustness: Demonstration that the way your security is implemented cannot be bypassed. Done by penetration testing, fuzzing, etc.

4.4. Common Criteria

Common Criteria is an international set of guidelines and specifications developed for evaluating information security products, specifically to ensure they meet an agreed-upon security standard.

It consists of:

- CC Part 1: Introduction and General Model

- CC Part 2: Security Functional Requirements (SFRs)

- CC Part 3: Security Assurance Requirements (SARs). Also presents the evaluation criteria for PPs and STs and presents seven pre-defined assurance levels.

- CEM (Common Evaluation Methodology)

Wording:

- TOE: Target of Evaluation. The product.

- ST: Security Target. The security claims made for the product.

- PP: Protection Profile. Describes a type of TOE, for example firewalls, smartcards, etc.

- Can be used as a template; if the PP is certified, the ST can claim conformance to the PP.

- Written by a community seeking consensus regarding a TOE

- Or by a government specifying its requirements as part of an acquisition process

- SFR: Set of Functional Components

- SAR: Set of Assurance Components

- EAL: Evaluation Assurance Levels

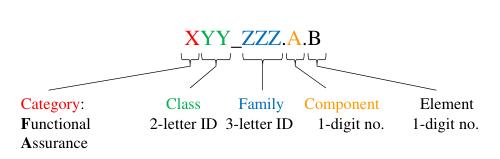

4.4.1. Format of Common Criteria

Examples: FIA_UAU.2.1, etc.
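An identifier such as FIA_UAU.2.1 reads as class (FIA), family (UAU), component (2) and element (1). A small sketch that splits it, assuming three-letter class and family codes; `CcElement` and `parse_cc_element` are hypothetical names.

```python
import re
from typing import NamedTuple


class CcElement(NamedTuple):
    cc_class: str   # e.g. FIA
    family: str     # e.g. UAU
    component: int  # e.g. 2
    element: int    # e.g. 1


# Assumed shape: CLASS_FAMILY.component.element with three-letter codes.
_PATTERN = re.compile(r"^([A-Z]{3})_([A-Z]{3})\.(\d+)\.(\d+)$")


def parse_cc_element(identifier: str) -> CcElement:
    match = _PATTERN.match(identifier)
    if match is None:
        raise ValueError(f"not a CC element identifier: {identifier!r}")
    cc_class, family, component, element = match.groups()
    return CcElement(cc_class, family, int(component), int(element))


print(parse_cc_element("FIA_UAU.2.1"))
```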

Requirements can have dependencies between them, and can be hierarchical to other components of the same family.

Each component accepts some operations, such as:

- Assignment: Specification of parameters

- Selection: Choose from list

- Refinement: Addition of details

- Iteration: Allow a component to be used more than once

4.4.2. Evaluation Assurance Level

EALs are predefined packages of assurance components. All predefined packages fulfill the dependencies.

- EAL1: Functionally tested

- EAL2: Structurally tested

- EAL3: Methodically tested and checked

- EAL4: Methodically designed, tested and reviewed

- EAL5: Semiformally designed and tested

- EAL6: Semiformally verified design and tested

- EAL7: Formally verified design and tested

Packages can be augmented with additional assurance components, which is marked with a '+' suffix (e.g. EAL4+).

5. Unit 5: Penetration Testing on the Web

Stages:

- Contract

- Scoping: Define what can be targeted and what not

- NDA: Non Disclosure Agreement, legally signed document to specify that you cannot freely share your findings.

- PTA: Permission to Attack, legally signed document to specify that you can attack the scoped infrastructure

- Specify what you will be doing, for example, checking the OWASP Top 10.

- Specify the exact version of the target

- Point out that a pentest does not reveal all vulnerabilities

- Discovery

- Enumeration of hosts

- Enumeration of services

- Attack

- Reporting

5.1. Attack

OWASP Top 10:

- Injection: SQL injection (SQLi), for example

- Broken Authentication: Easy bruteforce, bad sessionID, bad sessID validation

- Sensitive Data Exposure: Plaintext data transmission, weak crypto

- XML External Entities (XXE): Sending crafted XML that references external entities, e.g. to read local files

- Broken Access Control: Bypass restrictions, modifying JWT, etc

- Security Misconfiguration: Broad category, e.g. readable .htaccess, exposed .git directory

- Cross-Site Scripting (XSS): Reflected (temporal), stored (permanent), DOM (dynamic)

- Insecure Deserialization: Bad parsing

- Using Components with Known Vulnerabilities:

- Insufficient Logging and Monitoring:
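As an illustration of the Injection entry, here is a minimal sketch using Python's built-in `sqlite3` module. The table, the data and the two lookup functions are invented for the example; it contrasts string concatenation with a parameterized query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, secret TEXT)")
conn.executemany(
    "INSERT INTO users VALUES (?, ?)",
    [("alice", "a-secret"), ("bob", "b-secret")],
)


def lookup_vulnerable(name):
    # Vulnerable: the input is pasted into the SQL text and can rewrite the query.
    query = f"SELECT secret FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def lookup_safe(name):
    # Parameterized: the input is always treated as data, never as SQL.
    query = "SELECT secret FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


payload = "nobody' OR '1'='1"
print(lookup_vulnerable(payload))  # the WHERE clause becomes always-true
print(lookup_safe(payload))        # no user has that literal name
```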

CVEs: Database of known vulnerabilities for many products

CWEs: Comprehensive List of “bad practices” in implementation

5.2. Report

The report has to:

- State the version/configuration that was tested

- Provide a detailed explanation of the tests performed

- State the results

- Point out the limitations of the test

Structure:

- Executive summary

- Introduction

- Results summary

- Testing Summary

- Detailed Results

- Recommendations

6. Unit 6: Security Testing Mobile Apps

Mobile App Lifecycle:

- Planning

- Analysis

- Design

- Implementation

- Testing and Integration

- Maintenance

Different Testing Methods:

- Static Analysis

- Reverse Engineering

- Examining binaries, source code, etc.

- Dynamic Analysis

- Runtime Inspection

- Traffic Inspection

6.1. OWASP MASVS

OWASP Mobile Application Security Verification Standard can be used by mobile software developers to develop secure mobile applications.

It’s like a list of requirements, for example:

- No sensitive data is written to application logs

It has the following chapters:

- V1: Architecture. Design and Threat Modeling Requirements

- SDLC (Secure Development Lifecycle)

- Threat Modelling

- V2: Data Storage and Privacy Requirements

- Use system storage facilities

- No data stored outside app container

- No data leakage via logs

- V3: Cryptography Requirements

- Apply crypto properly

- No hardcoded keys

- V4: Authentication and Session Management Requirements

- Password policy

- Login to remote services

- Proper authorization/authentication

- V5: Network Communication Requirements

- Use TLS

- X.509 Certificate Validation

- V6: Environmental Interaction Requirements

- App follows best practices

- Minimum set of permissions

- User input validation

- V7: Code Quality and Build Setting Requirements

- Use proper code practises, for example stack protection

- No debug builds

- No dead code

- Proper exception handling

- V8: Resiliency Against Reverse Engineering Requirements

- Sandbox detection

- Obfuscation

- App detects root on device

6.2. OWASP MSTG

OWASP Mobile Security Testing Guide is a comprehensive manual for testing the security of mobile apps. It describes processes and techniques for verifying the requirements listed in the MASVS

It provides the test cases for the previous list of requirements.

The MASVS defines the following verification levels:

- MASVS-L1: Standard Security

- Basic Applications

- MASVS-L2: Defense-in-Depth

- Sensitive Applications

- MASVS-R: Resiliency Against Reverse Engineering and Tampering

They can be combined, for example L1 + R, L2 + R.

6.3. OWASP Mobile Top 10

- Improper Platform Usage

- Improper use of TouchID, Keychain, etc.

- Insecure Data Storage

- Insecure storage in filesystem

- Unencrypted mysql

- Log files

- Insecure Communication

- No usage of TLS

- Traffic can be sniffed

- Insecure Authentication

- Bypass authentication

- Insufficient Cryptography

- Weak cryptography

- Hardcoded keys

- Insecure Authorization

- Can execute higher privilege actions

- Client Code Quality

- Typical memory leaks, overflows

- Code Tampering

- Malware, security bypass, etc

- Ensure code integrity

- Reverse Engineering

- Get information like strings

- Reveal ciphers, keys, etc.

- Extraneous Functionality

- Misc. functionality like hidden switches

7. Unit 7: IoT Testing

Testing IoT devices is basically a blackbox evaluation. There is a lack of standards and regulations, testing time is very limited and only a limited number of samples is available.

The flow of hardware IoT analysis is the following:

- Identification of Samples

- Check that what you are going to test is actually the TOE that is intended to be tested

- OSINT Analysis

- Explore public firmware, papers, reports, data sheets, user manuals

- Make the blackbox a bit more greyish

- Remove Casing

- Can be trivial or very complex

- Limited samples

- Component Identification

- Denote main electronic components (back to OSINT)

- Penetration tests

- Do whatever the attacker does

- Create a test plan

7.1. Hardware Pentesting Examples

Root of Trust: Foundation for all security related functions

Bootloader relies on root of trust for loading firmware image

Secure Boot Flow:

- Initialize Hardware

- Initialize Flash

- Copy from flash to SRAM

- Verify copy in SRAM

- Jump to SRAM or Fail
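The copy, verify and jump steps can be sketched as follows. This is a simulation under stated assumptions: a trusted SHA-256 reference digest stands in for the root of trust (real devices typically verify a signature instead), hardware and flash initialisation are not modelled, and `secure_boot` and `BootFailure` are hypothetical names.

```python
import hashlib
import hmac


class BootFailure(Exception):
    """Raised when the firmware image fails verification."""


def secure_boot(flash_image: bytes, trusted_digest: bytes) -> bytes:
    # Copy from flash to SRAM (simulated by copying the bytes).
    sram = bytes(flash_image)

    # Verify the copy in SRAM, not the flash content, so that the code
    # that is executed is exactly the code that was checked.
    digest = hashlib.sha256(sram).digest()
    if not hmac.compare_digest(digest, trusted_digest):
        raise BootFailure("firmware image failed integrity check")

    # "Jump to SRAM": here we simply return the verified image.
    return sram


firmware = b"\x7fFIRMWARE v1.0"
reference = hashlib.sha256(firmware).digest()

print(secure_boot(firmware, reference) == firmware)
```

Changing a single byte of the image, as an evaluator would do when testing the bootloader, makes `secure_boot` raise `BootFailure`.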

How to evaluate the bootloader? Change the firmware image, perform glitching attacks, etc.

TEE (Trusted Execution Environment): a combination of hardware and software components that isolates trusted code from the rest of the system. It can run trusted applications (TAs).

8. Unit 8: Fuzzing

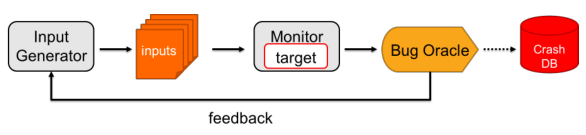

The idea of fuzzing is to automate the search for security vulnerabilities.

There are a few types of input generation:

- Random: Basic input validation checks will reject most inputs

- Mutation/Template Based: Modify valid inputs to create testcases

- Generation Based: Generate input from scratch, understand the protocol, the API, etc.

Then we can monitor the target:

- Crashes, hangs

- Sanitizer output
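A minimal sketch of mutation-based input generation combined with crash monitoring. The target `parse_record` is a hypothetical parser with a deliberately planted bug (it trusts the length byte); `mutate` and `fuzz` are illustrative names, and an unexpected exception stands in for a crash.

```python
import random


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite one to three random bytes of a valid input."""
    data = bytearray(seed)
    for _ in range(rng.randint(1, 3)):
        data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def parse_record(data: bytes) -> bytes:
    """Hypothetical target: 'REC' magic, one length byte, then the payload."""
    if data[:3] != b"REC":
        raise ValueError("bad magic")  # clean rejection, not a bug
    length = data[3]
    payload = data[4:4 + length]
    _last = payload[length - 1]  # planted bug: trusts the length field
    return payload


def fuzz(seed: bytes, iterations: int, rng: random.Random):
    crashes = []
    for _ in range(iterations):
        case = mutate(seed, rng)
        try:
            parse_record(case)
        except ValueError:
            pass  # expected rejection by input validation
        except Exception as exc:  # anything else counts as a crash
            crashes.append((case, repr(exc)))
    return crashes


crashes = fuzz(b"REC\x04ABCD", iterations=2000, rng=random.Random(0))
print(len(crashes), "crashing inputs found")
```

Because the mutations start from a valid record, many test cases keep the magic bytes intact and get past the first check, which purely random input rarely does.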

Also, we can collect the feedback we receive from the “Bug Oracle” and use it to improve the generation of test cases. This way we can trigger hard-to-reach code paths.

Coverage-based fuzzing has the goal of traversing as many program states as possible. Coverage can be measured by:

- Counting executed blocks

- Counting block transitions

- Binary instrumentation (with or without source code)
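A minimal sketch of a coverage-guided loop. The instrumentation is simulated: the hypothetical `target` function simply returns the set of block IDs it executed, and any input that reaches a new block is kept as a seed for further mutation.

```python
import random


def target(data: bytes) -> set[str]:
    """Hypothetical instrumented target: returns the blocks it executed."""
    blocks = {"entry"}
    if len(data) > 0 and data[0] == ord("F"):
        blocks.add("F")
        if len(data) > 1 and data[1] == ord("U"):
            blocks.add("FU")
            if len(data) > 2 and data[2] == ord("Z"):
                blocks.add("FUZ")  # hard to reach with blind random input
    return blocks


def coverage_guided_fuzz(seed: bytes, iterations: int, rng: random.Random):
    corpus = [seed]
    seen = set(target(seed))
    for _ in range(iterations):
        parent = rng.choice(corpus)
        child = bytearray(parent)
        child[rng.randrange(len(child))] = rng.randrange(256)
        child = bytes(child)
        covered = target(child)
        if not covered <= seen:  # reached a block never seen before
            seen |= covered
            corpus.append(child)  # keep it as a new seed
    return seen, corpus


seen, corpus = coverage_guided_fuzz(b"AAAA", 100_000, random.Random(0))
print(sorted(seen))
```

Each intermediate discovery ("F", then "FU") is saved and mutated further, so the nested condition is reached one byte at a time instead of requiring three correct bytes in a single random guess.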

Hardware fuzzing can be more difficult:

- Device memory corruptions are less visible

- Complex protocols and interfaces (e.g. USB)

- Performance

- No instrumentation

Protocol inference: Learn the protocol in order to be able to produce generation-based fuzz test cases.

9. Unit 9: Implementation of Attacks

There are two or three ways of performing the testing:

- Blackbox

- Evaluator gets the same information as consumer

- Unknown algorithm and countermeasures

- Same sample/similar to product

- No developer support

- Use any measures to break the device

- Attack the IT environment of the TOE

- Whitebox

- Evaluator has in-depth knowledge about internals

- Samples prepared for testing (opened, more functionality, etc)

- Support from the developer

- User behaviour is defined, environment is defined, configuration is defined, etc.

- Greybox

- A mix between the last two

If a whitebox attack is successful, is the device secure or not? It depends on the attack potential required for that attack.

What shall be tested?

- TOE (Target of Evaluation)

- Security Configuration

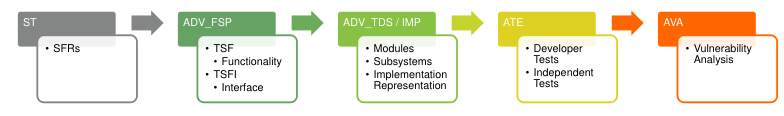

AVA Vulnerability Analysis. Here we have the simplified CC mapping between SFRs and AVA:

Typical Analysis Workflow:

- Implementation Analysis

- Modelling of the Attacked Algorithm

- Identification of Samples

- Preparation of Samples

- Establishing a Communication Channel

- Bypassing Countermeasures

- Target Identification

- Worst Case Analysis

- Attack Paths & Methods

- Attack Rating

Rating: To reach AVA_VAN.4, each attack path requires a rating of > 19. The rating is a final score: each of a series of factors is assigned a value, and the sum of those values is the rating. Whether the TOE passes or fails depends on the targeted AVA_VAN level.
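A minimal sketch of the rating arithmetic. Only the "> 19 for AVA_VAN.4" threshold comes from these notes; the factor names, the point values and the two attack paths are illustrative assumptions.

```python
# Minimum rating every attack path must reach ("> 19" for AVA_VAN.4).
MINIMUM_RATING = {"AVA_VAN.4": 20}


def attack_rating(factors: dict[str, int]) -> int:
    """The rating of one attack path is the sum of its factor values."""
    return sum(factors.values())


def passes(paths: dict[str, dict[str, int]], level: str) -> bool:
    """The TOE passes only if every attack path meets the minimum rating."""
    return all(attack_rating(f) >= MINIMUM_RATING[level] for f in paths.values())


# Hypothetical attack paths with made-up factor values.
paths = {
    "fault injection on bootloader": {
        "elapsed_time": 4, "expertise": 6, "knowledge_of_toe": 3,
        "window_of_opportunity": 4, "equipment": 4,
    },
    "debug port left open": {
        "elapsed_time": 1, "expertise": 3, "knowledge_of_toe": 0,
        "window_of_opportunity": 1, "equipment": 0,
    },
}

for name, factors in paths.items():
    print(f"{attack_rating(factors):>2}  {name}")
print("passes AVA_VAN.4:", passes(paths, "AVA_VAN.4"))
```

A single cheap attack path is enough to fail the level, which is why the workflow ends with a worst case analysis.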

10. Unit 10: Security from Release to Decommissioning

The following attributes need to be regarded throughout the lifecycle:

- Integrity

- Confidentiality

- Authenticity

- Correct Usage

CM (Configuration Management) is a systems engineering process for establishing and maintaining consistency of a product’s performance, functional, and physical attributes with its requirements, design, and operational information throughout its life.

During development until release, CM shall:

- Ensure the product is correct and complete before it is sent to the consumer

- Ensure that no configuration items are missed during development

- Prevent unauthorised modification, addition or deletion of configuration items

10.1. Secure Update

Security is never static, newly found vulnerabilities result in new attack vectors. Security must be considered throughout the whole product lifecycle.

We have to update devices deployed in the field, and the field is an untrusted environment, so we need a secure in-field update mechanism.

- Assets: FS/SW image

- Threats: Tampering of image, leakage of image, loading unauthorised image, etc.

- Attackers target the FS/SW image, the device during the update process and the backend infrastructure.

Main security requirements of the update process:

- Authenticity

- Integrity

- Confidentiality


How can we guarantee that updates are distributed only to uncompromised devices? How can we obtain the SW version of a device?

- Secure Boot: Guarantees that only trusted SW components are loaded during startup

- Remote device attestation: Allows remote service to verify device security state

- Sealing: Locks keys to specific, secure device state

The platform integrity state is gathered during secure boot by measuring the SW components loaded during startup. These components are only loaded after a successful integrity check.

Platform Configuration Registers (PCRs) securely store the measured hash values, and the results are compared to expected values. New PCR values are obtained by hash-chaining the old value with the extension value. The signed PCR state is then reported to a remote service.
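The hash-chaining step can be sketched as follows, assuming SHA-256 and a PCR that starts at all zeros after reset. The `measure`, `extend` and `boot` helpers are hypothetical names, and the signing of the PCR state is not modelled.

```python
import hashlib

PCR_SIZE = 32  # bytes, matching the SHA-256 digest size


def measure(component: bytes) -> bytes:
    """A measurement is the hash of the component about to be loaded."""
    return hashlib.sha256(component).digest()


def extend(pcr: bytes, measurement: bytes) -> bytes:
    """New PCR value = hash(old PCR value || measurement)."""
    return hashlib.sha256(pcr + measurement).digest()


def boot(components: list[bytes]) -> bytes:
    pcr = bytes(PCR_SIZE)  # assumed reset value: all zeros
    for component in components:
        pcr = extend(pcr, measure(component))
    return pcr


expected = boot([b"bootloader v1", b"kernel v1"])
tampered = boot([b"bootloader v1", b"kernel v1 + rootkit"])
reordered = boot([b"kernel v1", b"bootloader v1"])

print(expected.hex())
print(tampered == expected, reordered == expected)
```

Because each value depends on all previous ones, a PCR cannot be set to a chosen value: the final state encodes both which components were loaded and in which order.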

Direct Anonymous Attestation (DAA) is a privacy-preserving protocol designed for TPMs. The backend checks the signature and the PCR values against reference values; on success, it knows that it is interacting with a valid, uncompromised platform.

10.2. Secure Market Surveillance

The EU is regulating market surveillance.

It allows spot checks of products.

10.3. Secure Decommissioning

Device should support deletion of any stored sensitive data when end-of-life is reached.

For products intended to be part of a large system, decommissioning procedures shall be provided to allow for the product to be removed from the system.